#Regularization Techniques

Text

An Introduction to Regularization in Machine Learning

Summary: Regularization in Machine Learning prevents overfitting by adding penalties to model complexity. Key techniques, such as L1, L2, and Elastic Net Regularization, help balance model accuracy and generalization, improving overall performance.

Introduction

Regularization in Machine Learning is a vital technique used to enhance model performance by preventing overfitting. It achieves this by adding a penalty to the model's complexity, ensuring it generalizes better to new, unseen data.

This article explores the concept of regularization, its importance in balancing model accuracy and complexity, and various techniques employed to achieve optimal results. We aim to provide a comprehensive understanding of regularization methods, their applications, and how to implement them effectively in machine learning projects.

What is Regularization?

Regularization is a technique used in machine learning to prevent a model from overfitting to the training data. By adding a penalty for large coefficients in the model, regularization discourages complexity and promotes simpler models.

This helps the model generalize better to unseen data. Regularization methods achieve this by modifying the loss function, which measures the error of the model's predictions.
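Here is a minimal sketch that assumes mean squared error as the unregularized loss. The modified loss is the original error plus λ times a penalty on the weights. The function names mse and regularized_loss are illustrative and do not come from any library:

```python
def mse(y_true, y_pred):
    """Mean squared error: the unregularized loss in this sketch."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def regularized_loss(y_true, y_pred, weights, lam, penalty="l2"):
    """Base loss (MSE) plus lam times an L1 or L2 penalty on the weights."""
    if penalty == "l1":
        reg = sum(abs(w) for w in weights)  # sum of absolute values
    elif penalty == "l2":
        reg = sum(w * w for w in weights)   # sum of squares
    else:
        raise ValueError(f"unknown penalty: {penalty!r}")
    return mse(y_true, y_pred) + lam * reg


# Two hypothetical models that make identical predictions on the same data.
y_true = [1.0, 2.0, 3.0]
y_pred = [1.1, 1.9, 3.0]
small_weights = [0.5, 0.5]
large_weights = [5.0, -4.0]

print(regularized_loss(y_true, y_pred, small_weights, lam=0.1))
print(regularized_loss(y_true, y_pred, large_weights, lam=0.1))
```

Both models fit the data equally well. The model with larger weights scores worse only because of its penalty, and that penalty is what pushes training toward simpler models.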

How Regularization Helps in Model Training

In machine learning, a model's goal is to accurately predict outcomes on new, unseen data. However, a model trained with too much complexity might perform exceptionally well on the training set but poorly on new data.

Regularization addresses this by introducing a penalty for excessive complexity, thus constraining the model's parameters. This penalty helps to balance the trade-off between fitting the training data and maintaining the model's ability to generalize.

Key Concepts

Understanding regularization requires grasping the concepts of overfitting and underfitting.

Overfitting occurs when a model learns the noise in the training data rather than the actual pattern. This results in high accuracy on the training set but poor performance on new data. Regularization helps to mitigate overfitting by penalizing large weights and promoting simpler models that are less likely to capture noise.

Underfitting happens when a model is too simple to capture the underlying trend in the data. This results in poor performance on both the training and test datasets. While regularization aims to prevent overfitting, it must be carefully tuned to avoid underfitting. The key is to find the right balance where the model is complex enough to learn the data's patterns but simple enough to generalize well.

Types of Regularization Techniques

Regularization techniques are crucial in machine learning for improving model performance by preventing overfitting. They achieve this by introducing additional constraints or penalties to the model, which help balance complexity and accuracy.

The primary types of regularization techniques include L1 Regularization, L2 Regularization, and Elastic Net Regularization. Each has distinct properties and applications, which can be leveraged based on the specific needs of the model and dataset.

L1 Regularization (Lasso)

L1 Regularization, also known as Lasso (Least Absolute Shrinkage and Selection Operator), adds a penalty based on the absolute values of the coefficients. Mathematically, it modifies the cost function by adding a term proportional to the sum of the absolute values of the coefficients:

$$J(\mathbf{w}) = L(\mathbf{w}) + \lambda \sum_{j=1}^{p} |w_j|$$

where L(w) is the original (unregularized) loss, w1, …, wp are the model coefficients, and λ is the regularization parameter that controls the strength of the penalty.

The key advantage of L1 Regularization is its ability to perform feature selection. By shrinking some coefficients to exactly zero, it effectively removes less important features from the model. This results in a simpler, more interpretable model.

However, it can be less effective when the dataset contains highly correlated features, as it tends to arbitrarily select one feature from a group of correlated features.
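The following pure-Python sketch shows how Lasso reaches exact zeros. It assumes centred data with no intercept and takes L(w) to be half the mean squared error, so the objective is (1/(2n))·Σ(y_i − x_i·w)² + λ·Σ|w_j|. The fit uses coordinate descent with a soft-thresholding step. That is one common way to fit the Lasso, and it is the algorithm scikit-learn's Lasso uses. The helper names and toy data are hypothetical, and the code favours readability over speed.

```python
def soft_threshold(a, t):
    """Shrink a toward zero by t; anything within [-t, t] becomes exactly 0."""
    if a > t:
        return a - t
    if a < -t:
        return a + t
    return 0.0


def lasso_cd(X, y, lam, n_iters=100):
    """Coordinate descent for (1/(2n)) * ||y - Xw||^2 + lam * sum(|w_j|).

    Assumes the columns of X and y are centred, so no intercept is fitted.
    """
    n, p = len(X), len(X[0])
    w = [0.0] * p
    for _ in range(n_iters):
        for j in range(p):
            # Partial residual: what is left to explain once feature j is ignored.
            r = [y[i] - sum(X[i][k] * w[k] for k in range(p) if k != j) for i in range(n)]
            rho = sum(X[i][j] * r[i] for i in range(n)) / n
            z = sum(X[i][j] ** 2 for i in range(n)) / n
            w[j] = soft_threshold(rho, lam) / z  # thresholding can give exact 0
    return w


# Toy data (illustrative): y = 3*x1 + 0.5*x2, with orthogonal, centred columns.
X = [[1, 1], [1, -1], [-1, 1], [-1, -1]]
y = [3.5, 2.5, -2.5, -3.5]

for lam in (0.0, 0.1, 1.0, 5.0):
    print(lam, [round(c, 3) for c in lasso_cd(X, y, lam)])
```

On this data, λ = 1.0 sets the weak feature's coefficient to exactly zero and shrinks the strong one from 3 to 2. At λ = 5.0 both coefficients are zero. The thresholding step is what produces the feature-selection behaviour described above.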

L2 Regularization (Ridge)

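L2 Regularization, also known as Ridge Regression, adds a penalty based on the squares of the coefficients. It modifies the cost function by including a term proportional to the sum of the squared values of the coefficients:

$$J(\mathbf{w}) = L(\mathbf{w}) + \lambda \sum_{j=1}^{p} w_j^2$$

where L(w) is the original (unregularized) loss and λ controls the strength of the penalty.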

L2 Regularization helps to prevent overfitting by shrinking the coefficients of the features, but unlike L1, it does not eliminate features entirely. Instead, it reduces the impact of less important features by distributing the penalty across all coefficients.

This technique is particularly useful when dealing with multicollinearity, where features are highly correlated. Ridge Regression also tends to perform well when many features each have a small, non-zero effect on the target.
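For contrast, here is the same kind of sketch for Ridge. It makes the same assumptions: centred data, no intercept, L(w) taken as half the mean squared error, and hypothetical helper names and toy data. The only change from a Lasso-style update is that there is no thresholding. Each coefficient is divided by a larger number instead, so it shrinks but does not snap to zero.

```python
def ridge_cd(X, y, lam, n_iters=100):
    """Coordinate descent for (1/(2n)) * ||y - Xw||^2 + lam * sum(w_j^2).

    Assumes centred X columns and y (no intercept). Setting the derivative
    with respect to w_j to zero gives w_j = rho_j / (z_j + 2 * lam).
    """
    n, p = len(X), len(X[0])
    w = [0.0] * p
    for _ in range(n_iters):
        for j in range(p):
            # Partial residual ignoring feature j.
            r = [y[i] - sum(X[i][k] * w[k] for k in range(p) if k != j) for i in range(n)]
            rho = sum(X[i][j] * r[i] for i in range(n)) / n
            z = sum(X[i][j] ** 2 for i in range(n)) / n
            # Larger denominator shrinks w_j; there is no thresholding step.
            w[j] = rho / (z + 2 * lam)
    return w


# Toy data (illustrative): y = 3*x1 + 0.5*x2, with orthogonal, centred columns.
X = [[1, 1], [1, -1], [-1, 1], [-1, -1]]
y = [3.5, 2.5, -2.5, -3.5]

for lam in (0.0, 1.0, 5.0):
    print(lam, [round(c, 3) for c in ridge_cd(X, y, lam)])
```

With λ = 1.0, the coefficients shrink from 3 and 0.5 to 1.0 and about 0.167. Even at λ = 5.0, both remain non-zero.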

Elastic Net Regularization

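Elastic Net Regularization combines both L1 and L2 penalties, incorporating the strengths of both techniques. The cost function for Elastic Net is given by:

$$J(\mathbf{w}) = L(\mathbf{w}) + \lambda_1 \sum_{j=1}^{p} |w_j| + \lambda_2 \sum_{j=1}^{p} w_j^2$$

where L(w) is the original (unregularized) loss, and λ1 and λ2 are the regularization parameters for the L1 and L2 penalties, respectively.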

Elastic Net is advantageous when dealing with datasets that have a large number of features, some of which may be highly correlated. It provides a balance between feature selection and coefficient shrinkage, making it effective in scenarios where both regularization types are beneficial.

By tuning the parameters λ1 and λ2, one can adjust the degree of sparsity and shrinkage applied to the model.
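Combining the two updates gives a sketch of Elastic Net. It assumes centred data, no intercept, and half the mean squared error as L(w); the names and data are illustrative. The update soft-thresholds by λ1, then divides by a denominator enlarged by λ2. Libraries often parameterize this differently. For example, scikit-learn's ElasticNet uses a single alpha together with an l1_ratio, so the λ values here do not map one-to-one onto its parameters.

```python
def soft_threshold(a, t):
    """Shrink a toward zero by t; anything within [-t, t] becomes exactly 0."""
    if a > t:
        return a - t
    if a < -t:
        return a + t
    return 0.0


def elastic_net_cd(X, y, lam1, lam2, n_iters=100):
    """Coordinate descent for
    (1/(2n)) * ||y - Xw||^2 + lam1 * sum(|w_j|) + lam2 * sum(w_j^2).

    Assumes centred X columns and y (no intercept).
    """
    n, p = len(X), len(X[0])
    w = [0.0] * p
    for _ in range(n_iters):
        for j in range(p):
            r = [y[i] - sum(X[i][k] * w[k] for k in range(p) if k != j) for i in range(n)]
            rho = sum(X[i][j] * r[i] for i in range(n)) / n
            z = sum(X[i][j] ** 2 for i in range(n)) / n
            # L1 part: soft-threshold (sparsity); L2 part: larger denominator (shrinkage).
            w[j] = soft_threshold(rho, lam1) / (z + 2 * lam2)
    return w


X = [[1, 1], [1, -1], [-1, 1], [-1, -1]]
y = [3.5, 2.5, -2.5, -3.5]  # y = 3*x1 + 0.5*x2 (illustrative)

for lam1, lam2 in [(1.0, 0.0), (0.0, 1.0), (1.0, 0.5)]:
    print(lam1, lam2, [round(c, 3) for c in elastic_net_cd(X, y, lam1, lam2)])
```

With λ1 alone, this behaves like a Lasso fit; with λ2 alone, like Ridge. With both at these settings, the weak coefficient is zeroed and the strong one is shrunk further than either penalty alone would shrink it.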

Choosing the Right Regularization Technique

Selecting the appropriate regularization technique is crucial for optimizing your machine learning model. The choice largely depends on the characteristics of your dataset and the complexity of your model.

Factors to Consider

Dataset Size: The number of samples relative to the number of features matters more than the raw number of rows. When there are few samples per feature, L1 regularization (Lasso) can help because it produces sparse models by zeroing out less important features, which reduces overfitting. Note, though, that when features outnumber samples, Lasso keeps at most as many features as there are samples. When most features are expected to contribute, L2 regularization (Ridge) may be more suitable, as it smoothly shrinks all coefficients without eliminating any.

Model Complexity: For models with many features or parameters, the deciding question is how the signal is spread across them. If you suspect only a few features matter, L1 regularization helps through feature selection. If many features carry small effects or are highly correlated, L2 regularization tends to work better. Elastic Net is a reasonable middle ground when you are unsure.

Tuning Regularization Parameters

Adjusting regularization parameters involves selecting the right value for the regularization strength (λ, called alpha in libraries such as scikit-learn). A common approach is to evaluate a grid of λ values, often spaced on a logarithmic scale (for example 0.001, 0.01, 0.1, 1, 10), using cross-validation, and keep the value with the best validation score. A higher λ value increases regularization strength and shrinks the coefficients more, while a lower λ value reduces the regularization effect.
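The tuning loop can be sketched as follows. The sketch makes several simplifying assumptions:

- a one-feature ridge model without intercept, which has the closed-form solution w = Σxy / (Σx² + 2nλ) for the objective (1/(2n))·Σ(y − wx)² + λw²;
- simple interleaved k-fold splits;
- made-up data.

All helper names are hypothetical. In practice you would shuffle the data and use a library routine such as scikit-learn's GridSearchCV or RidgeCV.

```python
def fit_ridge_1d(xs, ys, lam):
    """Closed-form minimiser of (1/(2n)) * sum((y - w*x)^2) + lam * w^2, no intercept."""
    n = len(xs)
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    return sxy / (sxx + 2 * n * lam)


def cv_mse(xs, ys, lam, k=5):
    """Average validation MSE over k interleaved folds (index i goes to fold i % k)."""
    errors = []
    for fold in range(k):
        train = [i for i in range(len(xs)) if i % k != fold]
        valid = [i for i in range(len(xs)) if i % k == fold]
        w = fit_ridge_1d([xs[i] for i in train], [ys[i] for i in train], lam)
        errors.append(sum((ys[i] - w * xs[i]) ** 2 for i in valid) / len(valid))
    return sum(errors) / k


def choose_lambda(xs, ys, grid, k=5):
    """Return the lambda with the lowest CV error, plus all scores."""
    scores = {lam: cv_mse(xs, ys, lam, k) for lam in grid}
    best = min(scores, key=scores.get)
    return best, scores


# Toy data: y is roughly 2*x plus a small fixed "noise" pattern (illustrative only).
xs = [-4.5 + i for i in range(10)]
noise = [0.3, -0.2, 0.1, -0.4, 0.2, -0.1, 0.4, -0.3, 0.0, 0.2]
ys = [2 * x + e for x, e in zip(xs, noise)]

grid = [0.0, 0.01, 0.1, 1.0, 10.0, 1000.0]
best, scores = choose_lambda(xs, ys, grid)
for lam, s in scores.items():
    print(f"lambda={lam:>8}: CV MSE={s:.4f}")
print("chosen lambda:", best)
```

Very large λ values flatten the model toward w = 0 and score poorly, which is the underfitting side of the trade-off.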

Balancing these parameters ensures that your model generalizes well to new, unseen data without being overly complex or too simple.

Benefits of Regularization

Regularization plays a crucial role in machine learning by optimizing model performance and ensuring robustness. By incorporating regularization techniques, you can achieve several key benefits that significantly enhance your models.

Improved Model Generalization: Regularization techniques help your model generalize better by adding a penalty for complexity. This encourages the model to focus on the most important features, leading to more robust predictions on new, unseen data.

Enhanced Model Performance on Unseen Data: Regularization reduces overfitting by preventing the model from becoming too tailored to the training data. This leads to improved performance on validation and test datasets, as the model learns to generalize from the underlying patterns rather than memorizing specific examples.

Reduced Risk of Overfitting: Regularization methods like L1 and L2 introduce constraints that limit the magnitude of model parameters. This effectively curbs the model's tendency to fit noise in the training data, reducing the risk of overfitting and creating a more reliable model.

Incorporating regularization into your machine learning workflow helps keep your models effective and efficient across different scenarios.

Challenges and Considerations

While regularization is crucial for improving model generalization, it comes with its own set of challenges and considerations. Balancing regularization effectively requires careful attention to avoid potential downsides and ensure optimal model performance.

Potential Downsides of Regularization:

Underfitting Risk: Excessive regularization can lead to underfitting, where the model becomes too simplistic and fails to capture important patterns in the data. This reduces the model’s accuracy and predictive power.

Increased Complexity: Implementing regularization techniques can add complexity to the model tuning process. Selecting the right type and amount of regularization requires additional experimentation and validation.
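To see the underfitting risk concretely, the following sketch fits a one-feature ridge model with no intercept for increasing λ and reports the training error. The data and helper names are hypothetical. Because the penalty pulls w away from its best-fitting value toward zero, the training error can only rise as λ grows.

```python
def fit_ridge_1d(xs, ys, lam):
    """Closed-form minimiser of (1/(2n)) * sum((y - w*x)^2) + lam * w^2, no intercept."""
    n = len(xs)
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    return sxy / (sxx + 2 * n * lam)


def train_mse(xs, ys, w):
    """Mean squared error of the model y = w * x on the training data."""
    return sum((y - w * x) ** 2 for x, y in zip(xs, ys)) / len(xs)


xs = [-2.0, -1.0, 0.0, 1.0, 2.0]
ys = [-4.1, -1.9, 0.2, 2.1, 3.9]  # roughly y = 2x (illustrative data)

lams = (0.0, 0.1, 1.0, 10.0, 100.0)
for lam in lams:
    w = fit_ridge_1d(xs, ys, lam)
    print(f"lambda={lam:>6}: w={w:.3f}, training MSE={train_mse(xs, ys, w):.3f}")
```

A rising training error is expected on its own. When validation error rises along with it, λ is likely too large.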

Balancing Regularization with Model Accuracy:

Regularization Parameter Tuning: Finding the right balance between regularization strength and model accuracy involves tuning hyperparameters. This requires a systematic approach to adjust parameters and evaluate model performance.

Cross-Validation: Employ cross-validation techniques to test different regularization settings and identify the optimal balance that maintains accuracy while preventing overfitting.

Careful consideration and fine-tuning of regularization parameters are essential to harness its benefits without compromising model accuracy.

Frequently Asked Questions

What is Regularization in Machine Learning?

Regularization in Machine Learning is a technique used to prevent overfitting by adding a penalty to the model's complexity. This penalty discourages large coefficients, promoting simpler, more generalizable models.

How does Regularization improve model performance?

Regularization enhances model performance by preventing overfitting. It does this by adding penalties for complex models, which helps in achieving better generalization on unseen data and reduces the risk of memorizing training data.

What are the main types of Regularization techniques?

The main types of Regularization techniques are L1 Regularization (Lasso), L2 Regularization (Ridge), and Elastic Net Regularization. Each technique applies different penalties to model coefficients to prevent overfitting and improve generalization.

Conclusion

Regularization in Machine Learning is essential for creating models that generalize well to new data. By adding penalties to model complexity, techniques like L1, L2, and Elastic Net Regularization balance accuracy with simplicity. Properly tuning these methods helps avoid overfitting, ensuring robust and effective models.

#Regularization in Machine Learning#Regularization#L1 Regularization#L2 Regularization#Elastic Net Regularization#Regularization Techniques#machine learning#overfitting#underfitting#lasso regression

0 notes

Text

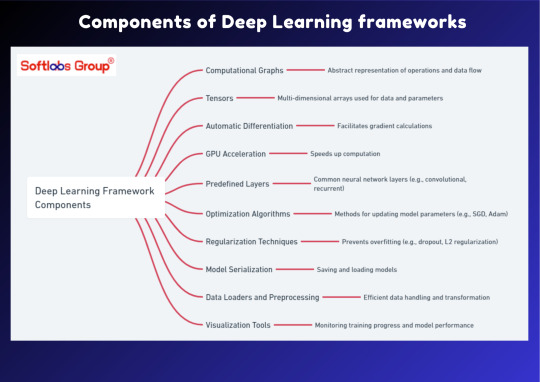

Discover the core components of Deep Learning frameworks with our informative guide. This simplified overview breaks down the essential elements that make up DL frameworks, crucial for building and deploying advanced neural network models. Perfect for those interested in exploring the world of artificial intelligence. Stay informed with Softlabs Group for more insightful content on cutting-edge technologies.

0 notes

Text

Kiba and the fever dream that were the Team 8 focused second chuunin exam episodes,,,,

#naruto#kiba inuzuka#team 8#they started strong with his silly ass hallucinating naruto and finished stronger with the mole guy questioning what the fuck he's saying#also . i am shaking and begging for kiba ro just get a filler technique where he turns into a dog . please .#don't bite people with your regular teeths#but yeah me personally big fan of this sorta narukiba dynamic (naruto is not even there and kiba is so sure he's being normal)#because who just makes up an image of their buddy sweaty cocky just mesh shirt on to conjure while . smelling grass#naruto credentials

104 notes

Text

I love the little group of misfits we got to see at the beginning of book ten!

#The fact that they had practiced fighting techniques#That involved dropping trees on people#Windwalker is so sweet#Wodensfang trying to teach toothless manners#Toothless missing the point and just adding in random “polite” words to his regular rude talk#Hiccup accidentally speaking dragonese to his mom#That one is kinda sad though#httyd books#hiccup horrendous haddock iii#Book Toothless#book hiccup#windwalker#wodensfang#how to seize a dragon's jewel

621 notes

Text

bingmaid

#the system has a gift for sqq#maidhe has been on the mind#luo binghe#mxtx svsss#svsss#luo bingmei#scum villains self saving system#tested a few drawing techniques!#this is gradient mapping with my regular round brush#bc it gives him a rounder cuter vibe

977 notes

Text

there are only 8 seams in this knit dress but at this rate each one's going to take me 2 hours

#my machine just loves chewing this pointelle up#i've got the walking foot installed and paper as stabilizer and i'm leaving the thread tails long so i can hold them to start the seam#and i've got my machine going at a max speed of medium-low bc of the walking foot and the chewing#sooo jealous of the sewalong where they're using a regular foot and doing nothing special while using the same exact fabric i'm using#oh and i still haven't gotten a new iron so i'm still using the spray-bottle-then-iron technique bc i have no access to steam#and each seam has to be 'steam[ed] generously'

55 notes

Text

One thing that's fucking hilarious about the modao-guidao distinction is how cql just fucking wrecks it. Because it was apparently sooooo scary and immoral for wwx to raise his friend from the dead they had to assure us that Wen Ning was simply grievously injured and never fully died so no that guy walking around deathly pale with black marks on his neck isn't a corpse, thank you very much. But because Zixuan's gotta die Wen Ning can still be controlled via evil music which means Wei Wuxian has now turned a living human being into a puppet. The literal definition of modao.

I mean they also made him not the inventor of it, so I guess the title grandmaster of demonic cultivation is still inaccurate just like it was for the books, just in a different way lmao.

#for the record this is why i dont like the idea of 'wwx is good BECAUSE he doesn't use modao. guidao is objectively more moral'#cql!wwx is objectively more woobified and less morally grey than book!wwx but he IS a demonic cultivator#the idea that guidao is an inherently more innocent and respectful technique is bullshit imo#modao can be used perfectly innocently- like xql! wwx healing his friend‐ and guidao can be used horifically. as can regular cultivation!#mdzs#mdzs meta#the untamed#cql#wei wuxian

142 notes

Text

honestly homophobic of netflix to remove the interactive with the julethief canon events in it

AND ON THE DAY CARMEN ESCAPES VILE NO LESS 😒

rip To Steal or Not To Steal. you had the entire fandom in a chokehold over that ending <3

#Netflix. Netflix when I catch you. WHEN I CATCH YOU NETFLIX.#Julia blushing is canon in our hearts <33#so many good interactions :(#had to play it one last time on the proper platform and noticed so. many. little. characterization bits.#like mine bomb choking and Carmen DOESNT HESITATE to help. also Carmen knowing the heimlich.#VILE teaching life saving techniques??? hmm suspicious (yeah first aid in the field they don’t want ops to die lmao)#ALSO CARMENS IMAGINATION?? like girly was in the middle of a mission pretending she was showing off sick dance moves to a pretty girl.#what a dork I love her. she cannot focus <3 it’s ok Carmen we all imagine showing off to a crowd of people and them joining in.#bellum not being able to stick to a name for the wiper/mind melt/cranial dranial#also carmen quoting Casablanca??! oh my god?? the layers??!#URHG WE NEEDED FILLER EPS. where’s my team red movie night. beach day. arcade trip. julethief coffee/museum/aquarium dates.#NETFLIX LET ME IN THAT WRITERS ROOM.#alright streaming services. the only reason we got u in the first place was because it was *slightly* more convenient than piracy.#excuse my Texas™️ here but the streaming platforms are getting a little too big for their britches nowadays.#could we at least consolidate into 1-2. this is getting ridiculous.#also 482 unskippable ads every 5 seconds. they’ve made regular cable but 200x worse#but that’s a topic for another day#anyways. goodbye To Steal or Not to Steal. you changed my brain chemistry at age 15 🫡#carmen sandiego 2019#carmensandiego#tsonts#fluffytheocelot#julethief#to steal or not to steal

55 notes

Text

was trying to explain to my dad why a Na'vi character wouldn’t be considered a fursona, here’s how I think of the distinction for anyone who cares lol

A fursona is a furry character used to represent oneself in some way shape or form. Furry, in this context, is an umbrella term to describe anthropomorphized animal characters (and/or people who enjoy the concept of such characters).

Anthropomorphism exists on a scale of course, it can be as simple as giving a regular animal more humanized thoughts and feelings, or it can go all the way up to “this is basically a human, walking talking wearing clothes having a job etc, but with fur”.

But anyways, a furry character is an animal that has been given human characteristics that its species does not usually have (in other words, anthropomorphized). A talking cat, a wolf that walks on two legs, a lizard with a day job, etc. Granted, I suppose this line can get a bit blurred with fictional species—for example, are dragons innately sapient? idk it depends on the setting—but generally if the species is considered an animal by default, then it can qualify as furry.

The Na'vi—like other fantasy humanoids such as elves, dwarves, trolls, etc.—are not animals. They speak and walk upright and wear clothes etc. not because we’ve anthropomorphized/humanized them, but because that’s just how their species innately is. There is no regular “feral” version of the Na'vi who walk on four paws, can’t use language, aren’t sapient*, etc. like there would be with a regular cat or wolf. Therefore a Na'vi is not a furry, and therefore cannot be a fursona. There’s certainly an argument to consider them furry-adjacent given their feline-esque features, but they don’t fall under the direct category of furry.

Now, you can absolutely have a Na'vi character that represents you of course!! A Na'vi-sona, if you will. I have one! It just wouldn’t be a “fursona” by definition.

*reminder before someone objects here that sapience is not the same thing as sentience. Sentience is simply an awareness of oneself; most animals are sentient. Sapience, on the other hand, is human-level intelligence and, with it, the capacity for morality. Humans are the only known real-world sapient species; in fiction there are of course several more

#this is not said to dunk on furries or to be a “furry in denial” or whatever#i have a fursona and even a fursuit and go to cons etc i ain't denying the lable lol#but as a furry myself I just genuinely consider calling the Na'vi “furries” to be legitimately categorically inaccurate ¯\_(ツ)_/¯#furry-adjacent sure! but not furries themselves#that said...I HAVE vaguely considered making a Na'vi-inspired fursuit someday...#not a true Na'vi; I think that would be extremely uncanny in fursuit form#a regular Na'vi would be far better suited to cosplay making techniques rather than fursuit making techniques#but a more cat-ified derived version...could maybe work 👀#would be soooo much work though...my hand aches just thinking about all sewing in all those stripes 😅#well who knows#if AWU ever decides to have an alien-themed year I might just have to do it ¯\_(ツ)_/¯ we'll see hrh#LED sanhì would also hypothetically look AMAZING but that's faaaar beyond my technical capabilities lol

25 notes

Text

so he just wants to eat me out and doesn't want penetrative sex or reciprocal oral or a relationship or anything, just literally wants to eat me out for hours and 'hone his craft'..................... am I nuts to be considering it

#this is an old friend i used to study with who recently got a job here and moved to a town 20min away by bus#i let him once. he's ok at it. i do think dedicated practice would be good for him and for whoever his next meal is.#and when he did last time his enthusiasm and endurance nearly made up for the faults in his technique#maybe im trying to rationalise it because I just want my pussy ate on the regular and the earliest thatd happen again otherwise is winter#and ngl conceptually it's pretty hot. if he does train up then this could be a great investment.#it's not like im going to date again before finishing my thesis so...#but i also had a friend who enjoyed a similar deal with her ex and i ended up feeling put off by how that dynamic turned out by the end#hmmm. things to think about while daydreaming about grinding his face into a smooth sanded plane using only my pelvis.

30 notes

Text

self portrait using menstrual blood and spit

#not a great likeness or great technique really just wanted to try it hehe#but it was interesting to work with since it’s so much goopier than regular blood#anywayyyy#my art

71 notes

Text

my own tags on my last post made me think abt it and i don't think I'd classify hisoka as Cruel. he's Evil, but I wouldn't call him Cruel. maybe i just forgot some very important plot points, and like, don't get me wrong, i do think he very well possesses the Potential to be cruel to others whenever he thinks it might be fun and/or useful, but from what we see explicitly, if i had to put characters on some sort of--

hold on.

this. this is what's in my head.

#and ig then you can talk abt Intention. bc i don't think illumi sees himself as cruel re: killua.#mainly bc that's just his brother. that's not a well-rounded regular person you Can be cruel to.#he's just the guy they're training into an assassin and he acts out and you gotta be careful.#and there's techniques you can make use of to perform that carefulness and to mold him into what you want this barely-a-person to be.#but regardless of that he's so fucking cruel.#and man i don't know but this rly stuck out to me while watching the hunter exam episode this morning#and then listened to jack - oblivious to this as of then - ask the others who is more evil between the two.#ok i need to stop thinking abt them.#*#image#shoop.lb#undescribed

15 notes

Text

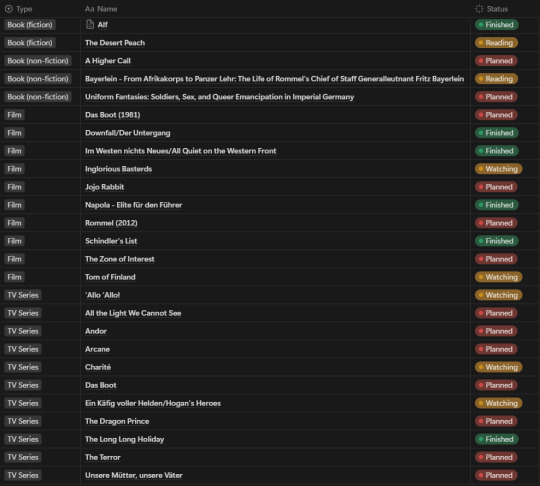

How long do you want your title to be? Historical non-fiction: y e s

Last year I actually started making a proper list of films, series etc that I want to watch - something I've been wanting to do for a long time but never did. That probably caused me to forget about things more than once😂

I mainly started it for historical movies at first, because my history phase that I had when I was about 14 or 15 came back big time, and there have been some things I've wanted to watch since then already but still hadn't! I've known about Downfall since like 2017 or so but didn't watch the full movie until last year😅 I'm having a lot of fun with it though since I've of course grown and developed through these past few years and now have more knowledge in general, more experience on how to do research and also more reflective and mature thinking. Of course not all historic/history-inspired media is accurate (and I don't think it has to be for every purpose, like Hogan's Heroes is still incredibly funny even if the timeline doesn't always make sense or ranks and uniforms don't match up). But I think it can be a great starting point for getting interested in a specific person or event and then delving into more detailed research about it.

I've also added some newer ones though that I didn't know about back then, or that didn't even exist yet. The list is really a great help for organising my brain. I've also started to add in things not related to history though (like Arcane - I still haven't watched season 2 rip - or The Dragon Prince). I wasn't sure if I should make a separate list for them but at least for now I just threw everything together in one. It helps a lot especially for series because I try to always write down which season and episode I'm at (I just didn't include that column in the screenshot) because sometimes I get distracted by something else and don't continue for a while and then forget where I was at😭

This was just a bit of random babbling but like this is kinda what I've been up to. I haven't actually continued on that many things in a while because I was too stressed with university, but I hope to continue soon. Also if you have more recommendations you're welcome to let me know👀 I'm currently mostly interested in the World Wars (there's so much to be learned about both but I think I really want to broaden my knowledge on WWI), the Weimar Republic/interwar period in general (also Austrian interwar history of course but to be honest I don't know if there are actually ANY movies on that), but also pre-WWI Austria-Hungary and the German Empire and like generally the 18th/19th/20th century (other countries too of course, but with these two I have many historical sites basically in front of my door, and as a native speaker a lot of primary sources are also relatively easily accessible to me). There is so much interesting stuff in history as a whole (I love watching historical documentaries about like any time period or country, there's always something fascinating to learn), these are just the ones I'm most focused on at the moment and want to study in a bit more detail!

#I don't know if I'll post a lot about it but like maybe a little bit! at least those historical media that are more heavily fictionalised#of course this doesn't mean I'm abandoning self shipping or other fandoms in any way#but I've always used my blog for more than just one specific topic or interest and will continue to do so#and sometimes there are even ways to intertwine them like I love imagining infodumping on my f/os and telling them obscure history facts!!#I just really love being passionate about things!#I also want to get more into historical costuming (I already enjoy 'regular' cosplay even though i barely have one finished costume😂)#but I also love learning about the crafting techniques people used back then#I actually started working on an 18th century dress last year but still have barely finished the underdress rip#hope to pick it up again because I really miss sewing!#it's the patterning and planning that stresses me out so much but the actual sewing part (especially by hand) can be so calming actually#but i digress#I'm not actually sure where I was going with this whole post I just wanted to talk about interests#history#historical fiction#selnia talks

8 notes

Text

Give Mae a purple lightsaber…and, no, not just for aesthetic purposes.

#feel free to tell me if I’m tripping (but don’t be a jackass about it I’m actually super in love w/ this idea)#it fits okay#she’s going her own way…not quite in the dark but not in the light either#she's toeing the line (channeling upon the darkness but not allowing it to consume her)#she deserves the world#AND a purple lightsaber#mae aniseya#jedi!mae#except not really but yk#mae ho aniseya#star wars: the acolyte#and let it look like a broad sword (a callback to the chain mail in her armor & the warriors of her ‘coven’/Koril)#maybe— I’m still not opposed to her having a regular saber but just duel wielding shoto blades#she fights utilizing juyo techniques too#the acolyte#juyo#thoughts#+w#sage speaks

12 notes

Text

OMGGGG TIEN !!!!!!!!!! YAYYAYAAYYAYA (DB ep 121 spoilers)

THIS WAS SOOO HYPE AHHHHHHHHHHHHH FANTASTIC MOMENT FOR TIEN FANS

he was relegated to sideline commentator most of the fight and i was like "sadge 😔" BUT THEN HE COMES IN WITH THE FUCKIN SAVE!!!!!! YEAH!!!!!!!!!!!! YEAHHHHH

Why haven't Goku and co, like, asked Tien how he does that? Especially with Goku's habit of mimicking techniques, you would think they would have shown some curiosity in learning that technique by now.

Whatever its fine we got a top tier tien moment i am LIVING

#dragon ball#db caps#tenshinhan#tien shinhan#i think i came across a screencap of tien with like yamcha or something#and he was saying “alright i guess i will teach you the levitation technique”#so i guess it happens eventually??#lol spoilers#getting spoiled on a regular basis#but im very glad this scene wasnt spoiled for me#very pog#we even got a “BIIII😝”#thats how you know its peak#also surprised goku didnt bring any senzu with him from karins place#db ep 121

12 notes

Text

Chakra as a type of energy like heat or electricity. Let me cook (literally, I have a fever)

Chakra is produced by living organisms. Plants and animals. If you want to channel chakra through a substance, you need some kind of organic material. Animals and plants in the dirt, bacteria in the water, etc. etc. What if chakra metal was some kind of carbon-steel. Organic material to channel the yin aspect, conductive metal to channel the yang aspect. With too little organic material the chakra won’t take, and with too much it would just burn up.

Blacksmiths from idk the Land of Iron or Lightning have special secret ratios for their chakra metal. Maybe a specific kind of atomic matrix prevents energy loss and holds a technique longer after you imbue it, making it highly efficient and sought after by other countries, which do have chakra metal but it’s not the BEST, ya know? Somebody with a chakra weapon from Iron or Lightning (I haven’t decided yet. Or maybe they have a rivalry. That would be funny) would be the envy of any warrior.

#Naruto worldbuilding#Naruto#tbh I feel like I got to regular ass sword making techniques with extra steps but what ever#it’s about the theory behind it

12 notes
